Invited Speakers

The following speakers have graciously agreed to give keynotes at AIST-2020.

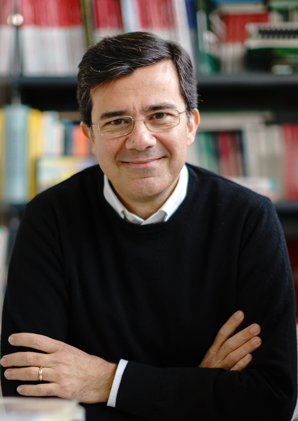

Marcello Pelillo

Graph-theoretic Methods in Computer Vision: Recent Advances

Abstract: Graphs and graph-based representations have long been an important tool in computer vision and pattern recognition, especially because of their representational power and flexibility. There is now renewed interest in explicitly formulating computer vision problems as graph problems. This is particularly advantageous because it allows vision problems to be cast in a pure, abstract setting with solid theoretical underpinnings, and it also gives access to the full arsenal of graph algorithms developed in computer science and operations research. In this talk I’ll describe some recent developments in graph-theoretic methods that allow us to address a number of classical computer vision problems within a unified and principled framework. These include interactive image segmentation, image geo-localization, image retrieval, multi-camera tracking, and person re-identification. The concepts discussed here have intriguing connections with optimization theory, game theory and dynamical systems theory, and can be applied to weighted graphs, digraphs and hypergraphs alike.
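The abstract does not name a specific algorithm. Purely as an illustration of how a grouping problem cast on a weighted graph can link optimization, game theory and dynamical systems, the sketch below extracts one cluster (a "dominant set") by running discrete-time replicator dynamics on an affinity matrix. The choice of this technique, the function names, the cutoff and the toy matrix are our assumptions, not material from the talk.

```python
from typing import List

def replicator_dynamics(A: List[List[float]], max_iter: int = 1000,
                        tol: float = 1e-10) -> List[float]:
    """Discrete-time replicator dynamics on a symmetric, non-negative affinity
    matrix A with zero diagonal, started from the barycenter of the simplex."""
    n = len(A)
    x = [1.0 / n] * n
    for _ in range(max_iter):
        # Payoff of each node against the current distribution x: (A x)_i
        ax = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        # Average payoff x^T A x keeps the update on the probability simplex
        avg = sum(x[i] * ax[i] for i in range(n))
        if avg == 0.0:
            break  # no affinities at all: nothing to cluster
        new_x = [x[i] * ax[i] / avg for i in range(n)]
        converged = sum(abs(a - b) for a, b in zip(new_x, x)) < tol
        x = new_x
        if converged:
            break
    return x

def dominant_set(A: List[List[float]], cutoff: float = 1e-5) -> List[int]:
    """Nodes that keep non-negligible weight form the extracted cluster."""
    x = replicator_dynamics(A)
    return [i for i, xi in enumerate(x) if xi > cutoff]

# Hypothetical affinity matrix: nodes 0, 1, 2 are mutually similar,
# node 3 is only weakly similar to everyone.
A = [[0.0, 1.0, 1.0, 0.1],
     [1.0, 0.0, 1.0, 0.1],
     [1.0, 1.0, 0.0, 0.1],
     [0.1, 0.1, 0.1, 0.0]]
print(dominant_set(A))  # [0, 1, 2]
```

The weakly connected node loses weight at every step, so only the tightly coupled group survives; peeling off that group and repeating would yield further clusters.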

Miguel Couceiro

Making models fairer through explanations

Abstract: Algorithmic decisions are now made on a daily basis, and they are often based on Machine Learning (ML) processes that may be complex and biased. This raises several concerns, given the critical impact that biased decisions may have on individuals or on society as a whole. Unfair outcomes not only affect human rights; they also undermine public trust in ML and AI.

In this talk we will address fairness issues of ML models based on decision outcomes, and we will show how the simple idea of “feature dropout” followed by an “ensemble approach” can improve model fairness. To illustrate, we will revisit the case of “LimeOut”, which was proposed to tackle “process fairness”, a measure of a model’s reliance on sensitive or discriminatory features. Given a classifier, a dataset and a set of sensitive features, LimeOut first assesses whether the classifier is fair by checking its reliance on sensitive features using “Lime explanations”. If the classifier is deemed unfair, LimeOut then applies feature dropout to obtain a pool of classifiers. These are then combined into an ensemble classifier that was empirically shown to be less dependent on sensitive features without compromising the classifier’s accuracy.
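The feature-dropout-plus-ensemble step can be sketched as follows. This is a minimal sketch under stated assumptions: the pool is taken to contain one model per dropped sensitive feature plus one model with all sensitive features dropped, and the ensemble averages predicted probabilities. The LIME-based fairness check is omitted, and the toy logistic-regression learner, the function names and the data are hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]
Model = Callable[[Row], float]  # returns an estimate of P(y = 1 | row)

def _sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(rows: List[Row], labels: List[int], features: Sequence[str],
                   lr: float = 0.5, epochs: int = 500) -> Model:
    """Hypothetical base learner: logistic regression fitted by batch gradient
    descent on the given feature names only."""
    w = {f: 0.0 for f in features}
    b = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = {f: 0.0 for f in features}
        grad_b = 0.0
        for row, y in zip(rows, labels):
            err = _sigmoid(b + sum(w[f] * row[f] for f in features)) - y
            for f in features:
                grad_w[f] += err * row[f]
            grad_b += err
        for f in features:
            w[f] -= lr * grad_w[f] / n
        b -= lr * grad_b / n

    def predict_proba(row: Row) -> float:
        return _sigmoid(b + sum(w[f] * row[f] for f in features))
    return predict_proba

def feature_dropout_ensemble(rows: List[Row], labels: List[int],
                             features: Sequence[str], sensitive: Sequence[str],
                             train: Callable[..., Model] = train_logistic) -> Model:
    """Assumed pool: one model per dropped sensitive feature, plus one model
    with all sensitive features dropped; the ensemble averages probabilities."""
    pool = [train(rows, labels, [f for f in features if f != s]) for s in sensitive]
    pool.append(train(rows, labels, [f for f in features if f not in sensitive]))

    def ensemble(row: Row) -> float:
        return sum(model(row) for model in pool) / len(pool)
    return ensemble

# Toy data (hypothetical): the label depends on "income"; "gender" is sensitive.
rows = [{"income": i / 5, "gender": float(i % 2)} for i in range(6)]
labels = [0, 0, 0, 1, 1, 1]
ens = feature_dropout_ensemble(rows, labels, ["income", "gender"], ["gender"])
print(ens({"income": 0.9, "gender": 0.0}), ens({"income": 0.9, "gender": 1.0}))
```

With a single sensitive feature every pool member ignores it, so both calls print the same probability; with k sensitive features the pool has k + 1 members and the ensemble dilutes, rather than removes, any remaining reliance on them.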

We will present experiments on multiple datasets and several state-of-the-art classifiers, which show that LimeOut’s classifiers improve (or at least maintain) process fairness as well as other fairness metrics, such as individual and group fairness, equal opportunity and demographic parity, among others.
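Two of the group metrics named above have widely used textbook definitions for a binary sensitive attribute A: the demographic parity gap compares positive-prediction rates between groups, and the equal opportunity gap compares true-positive rates. The sketch assumes those definitions (the talk may use variants), and the data is hypothetical.

```python
from typing import List, Sequence

def _positive_rate(preds: List[int]) -> float:
    if not preds:
        raise ValueError("empty group: rate is undefined")
    return sum(preds) / len(preds)

def demographic_parity_gap(y_pred: Sequence[int], group: Sequence[int]) -> float:
    """|P(y_hat = 1 | A = 0) - P(y_hat = 1 | A = 1)| for a binary attribute A."""
    rates = [_positive_rate([p for p, a in zip(y_pred, group) if a == g]) for g in (0, 1)]
    return abs(rates[0] - rates[1])

def equal_opportunity_gap(y_true: Sequence[int], y_pred: Sequence[int],
                          group: Sequence[int]) -> float:
    """Difference in true-positive rates P(y_hat = 1 | y = 1, A = g) between groups."""
    tprs = [_positive_rate([p for t, p, a in zip(y_true, y_pred, group) if a == g and t == 1])
            for g in (0, 1)]
    return abs(tprs[0] - tprs[1])

# Hypothetical labels and predictions for six individuals, three per group
y_true = [1, 1, 0, 1, 1, 0]
y_pred = [1, 1, 0, 1, 0, 0]
group = [0, 0, 0, 1, 1, 1]
print(demographic_parity_gap(y_pred, group))         # roughly 0.333
print(equal_opportunity_gap(y_true, y_pred, group))  # 0.5
```

A gap of 0 means parity on that metric; individual fairness is not captured by either function, since it compares similar individuals rather than groups.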

Leonard Kwuida

On interpretability and similarity in concept based Machine Learning

Abstract: Machine Learning (ML) provides important techniques for classification and prediction. Most of these are black-box models for users and do not provide decision makers with an explanation. For the sake of transparency and the validity of decisions, developing explainable/interpretable ML methods is becoming increasingly important. Several questions need to be addressed:

- How does an ML procedure derive the class for a particular entity?

- Why does a particular clustering emerge from a particular unsupervised ML procedure?

- What can we do if the number of attributes is very large?

- What are the possible reasons for mistakes in concrete cases and models?

For binary attributes, Formal Concept Analysis (FCA) offers techniques in terms of intents of formal concepts, and thus provides plausible reasons for model predictions. However, from the viewpoint of interpretable machine learning, we still need to tell decision makers how important individual attributes are to the classification of a particular object, which may facilitate explanations by experts in domains where errors are costly, such as medicine or finance.
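To make "intents of formal concepts" concrete: in FCA a formal context relates objects to binary attributes, the derivation A' gives the attributes shared by all objects in A, B' gives the objects having all attributes in B, and a formal concept is a pair (A, B) with A' = B and B' = A. The intent shared by a group of objects is what can serve as a human-readable reason. The brute-force enumeration and the toy context below are illustrative assumptions, suitable only for tiny contexts.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set, Tuple

Context = Dict[str, Set[str]]  # object -> binary attributes it has

def intent(objects: Set[str], ctx: Context) -> Set[str]:
    """Derivation A': attributes shared by every object in A."""
    if not objects:
        return set().union(*ctx.values())  # by convention, the empty set maps to all attributes
    return set.intersection(*(ctx[o] for o in objects))

def extent(attributes: Set[str], ctx: Context) -> Set[str]:
    """Derivation B': objects that have every attribute in B."""
    return {o for o, attrs in ctx.items() if attributes <= attrs}

def formal_concepts(ctx: Context) -> Set[Tuple[FrozenSet[str], FrozenSet[str]]]:
    """Brute force: every concept is (B', B'') for some attribute set B.
    Exponential in the number of attributes, so only for small illustrations."""
    attrs = sorted(set().union(*ctx.values()))
    concepts = set()
    for k in range(len(attrs) + 1):
        for b in combinations(attrs, k):
            ext = extent(set(b), ctx)
            concepts.add((frozenset(ext), frozenset(intent(ext, ctx))))
    return concepts

# Hypothetical context: objects described by binary attributes a, b, c
ctx = {"o1": {"a", "b"}, "o2": {"a", "c"}, "o3": {"a", "b", "c"}}
print(sorted(intent({"o1", "o3"}, ctx)))  # ['a', 'b']
print(len(formal_concepts(ctx)))          # 4
```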

In this talk, we will discuss how notions from cooperative game theory can be used to assess the contribution of individual attributes to classification and clustering processes in concept-based machine learning. To address the third question, we present some ideas on how to reduce the number of attributes using similarities in large contexts.
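The best-known cooperative-game notion for attributing a joint outcome to individual players is the Shapley value; treating attributes as players is one natural reading of the idea above, though the abstract does not say which solution concept or characteristic function the talk uses. The sketch computes exact Shapley values by enumerating coalitions; the function `v` is a hypothetical toy rule standing in for whatever the concept-based classifier would provide.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence

def shapley_values(players: Sequence[str],
                   value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley values: for each player i, the weighted average of its
    marginal contribution v(S + {i}) - v(S) over all coalitions S without i,
    with weight |S|! (n - |S| - 1)! / n!."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                coalition = frozenset(s)
                total += weight * (value(coalition | {i}) - value(coalition))
        phi[i] = total
    return phi

# Hypothetical characteristic function: a coalition of attributes "wins" (v = 1)
# only if it contains both "fever" and "cough".
def v(coalition: FrozenSet[str]) -> float:
    return 1.0 if {"fever", "cough"} <= coalition else 0.0

print(shapley_values(["fever", "cough", "age"], v))
```

Here "fever" and "cough" each receive 0.5 and "age" receives 0, and the values sum to v(all) - v(empty). Exact enumeration costs O(2^n) per attribute, which is one reason the number of attributes matters.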

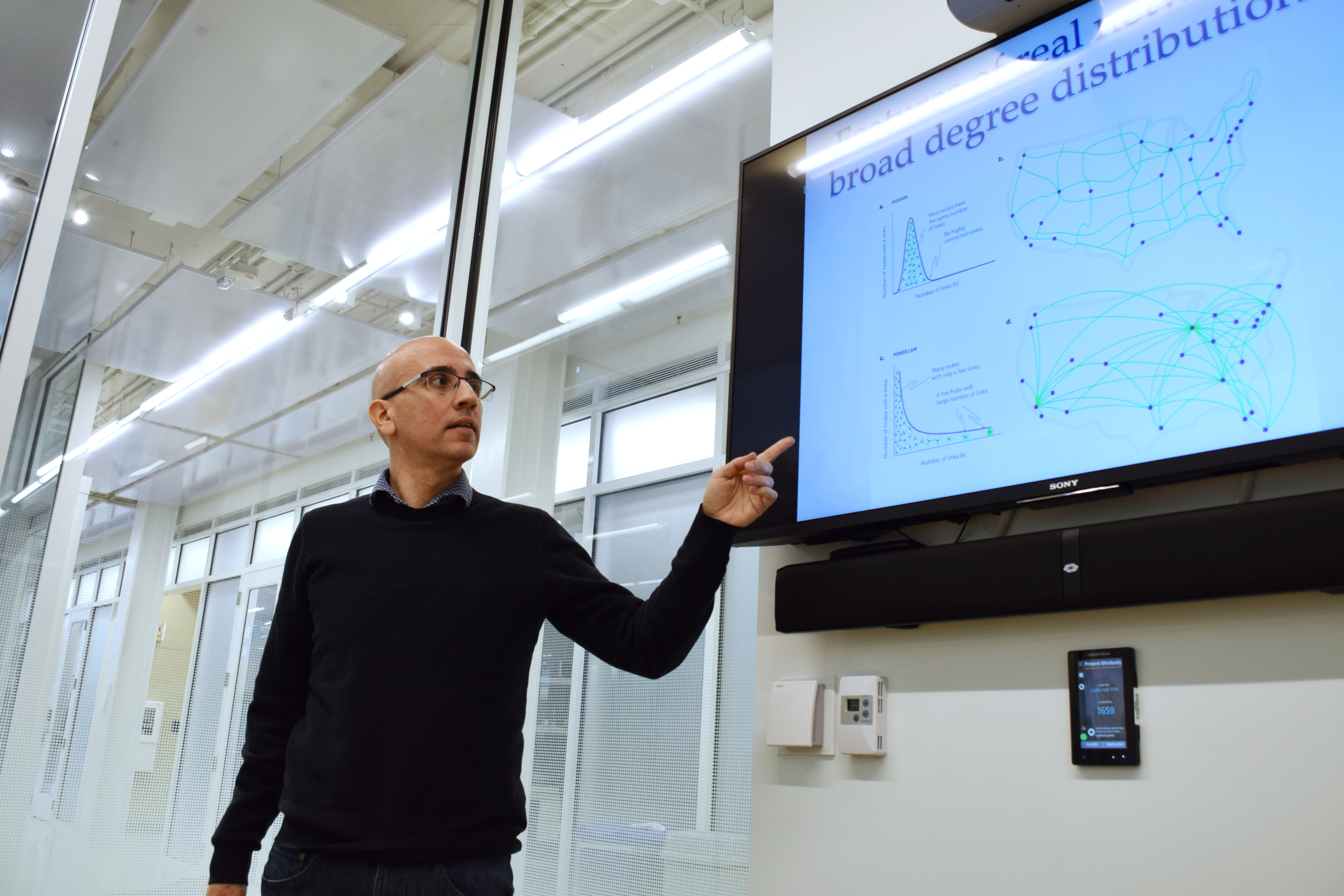

Santo Fortunato

Consensus clustering in networks

Abstract: Algorithms for community detection are usually stochastic, leading to different partitions for different choices of random seeds. Consensus clustering is an effective technique for deriving more stable and accurate partitions than those obtained by applying the algorithm directly. Here we will show how this technique can be applied recursively to improve the results of clustering algorithms.
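A minimal sketch of the consensus step, assuming the usual recursive scheme: run the stochastic algorithm several times, build the consensus matrix D whose entry D[i][j] is the fraction of runs that put nodes i and j together, zero out entries below a threshold tau, rerun the algorithm on the weighted graph given by D, and stop once all entries are 0 or 1. The community-detection algorithm itself is left out; the helper names, tau and the toy runs are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]

def consensus_matrix(partitions: Sequence[Sequence[int]]) -> Matrix:
    """D[i][j] = fraction of the input partitions that put nodes i and j in the
    same cluster. Each partition is a list of cluster labels indexed by node."""
    n, m = len(partitions[0]), len(partitions)
    counts = [[0] * n for _ in range(n)]
    for labels in partitions:
        for i in range(n):
            for j in range(n):  # all node pairs: quadratic in n
                if labels[i] == labels[j]:
                    counts[i][j] += 1
    return [[c / m for c in row] for row in counts]

def threshold(D: Matrix, tau: float) -> Matrix:
    """Zero out weak co-memberships (entries below tau) to denoise the matrix."""
    return [[v if v >= tau else 0.0 for v in row] for row in D]

def is_consensus(D: Matrix) -> bool:
    """The recursion can stop once all entries are 0 or 1, i.e. every run agrees."""
    return all(v in (0.0, 1.0) for row in D for v in row)

# Three hypothetical runs of a stochastic algorithm on a 4-node graph
runs = [[0, 0, 1, 1], [0, 0, 0, 1], [0, 0, 1, 1]]
D = threshold(consensus_matrix(runs), tau=0.5)
print(D[1][2], is_consensus(D))  # 0.0 False
```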

The basic procedure requires computing the consensus matrix, which can be quite dense if (some of) the clusters of the input partitions are large. Consequently, the complexity can get dangerously close to quadratic, which makes the technique inapplicable to large graphs. Hence we also present a fast variant of consensus clustering, which calculates the consensus matrix only on the links of the original graph and on a comparable number of suitably chosen additional node pairs. This brings the complexity down to linear, while the performance remains comparable to that of the full technique. Therefore, the fast consensus clustering procedure can be applied to networks with millions of nodes and links.
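The sparse variant can be sketched by storing consensus weights only for existing links, so the cost grows with the number of edges rather than with n^2. The abstract says only that the additional node pairs are "suitably chosen"; the rule used here (sample a node and two of its neighbors, in the spirit of triadic closure) is our assumption, as are the function names and the toy graph.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[int, int]

def co_membership(u: int, v: int, partitions: Sequence[Sequence[int]]) -> float:
    """Fraction of partitions that place u and v in the same cluster."""
    return sum(p[u] == p[v] for p in partitions) / len(partitions)

def sparse_consensus(edges: List[Pair], partitions: Sequence[Sequence[int]],
                     n_extra: int = 0, seed: int = 0) -> Dict[Pair, float]:
    """Consensus weights on the graph's edges only, plus up to n_extra extra
    node pairs; cost grows with the number of edges, not with n^2."""
    weights: Dict[Pair, float] = {}
    adj = defaultdict(set)
    for u, v in edges:
        weights[(min(u, v), max(u, v))] = co_membership(u, v, partitions)
        adj[u].add(v)
        adj[v].add(u)
    # Assumed pair-selection rule: pick a random node and two of its neighbors;
    # keep the pair only if it has not been scored yet (closing an open triad).
    rng = random.Random(seed)
    centers = [x for x in adj if len(adj[x]) >= 2]
    for _ in range(n_extra):
        if not centers:
            break
        a, b = rng.sample(sorted(adj[rng.choice(centers)]), 2)
        key = (min(a, b), max(a, b))
        if key not in weights:
            weights[key] = co_membership(a, b, partitions)
    return weights

edges = [(0, 1), (1, 2), (2, 3)]
runs = [[0, 0, 1, 1], [0, 0, 0, 1]]
print(sparse_consensus(edges, runs))  # {(0, 1): 1.0, (1, 2): 0.5, (2, 3): 0.5}
```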

Industry Talks

Nikita Semenov

Text and speech processing projects at MTS AI

Abstract: How can effective systems for processing and understanding speech be built in a large corporation, and what challenges does the researcher face along the way? It is no secret that any solution has its own life cycle, and solutions based on natural language processing technologies are no exception. We will dwell in particular on the components of the life cycle of solutions built with NLP and ASR technologies.

Ivan Smurov

When CoNLL-2003 is not enough: are academic NER and RE corpora well suited to representing real-world scenarios?

Abstract: Many business applications require named entity recognition (NER) or relation extraction (RE). There exist several well-studied academic corpora for these tasks, and the scores obtained on them are typically high. Taking recent advances in NER into account, one might even assume that it is a largely solved task.
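Scores on CoNLL-2003-style NER benchmarks are usually reported as entity-level F1 with exact span matching. The sketch below is a simplified re-implementation under that assumption (BIO tags, micro-averaging over sentences); it is not the official evaluation script and may treat malformed tag sequences differently. Relation extraction scoring is not covered, and the example sentences are hypothetical.

```python
from typing import List, Sequence, Set, Tuple

Span = Tuple[int, int, str]  # (start, end exclusive, entity type)

def bio_spans(tags: Sequence[str]) -> Set[Span]:
    """Turn a BIO tag sequence into entity spans. An I- tag that does not
    continue an open entity of the same type starts a new one (lenient)."""
    spans: Set[Span] = set()
    start, etype = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes a trailing entity
        continues = tag.startswith("I-") and tag[2:] == etype
        if not continues:
            if start is not None:
                spans.add((start, i, etype))
            start, etype = (i, tag[2:]) if tag != "O" else (None, None)
    return spans

def entity_f1(gold: List[Sequence[str]], pred: List[Sequence[str]]) -> float:
    """Micro-averaged, exact-match entity F1 over a list of sentences."""
    tp = fp = fn = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = bio_spans(g_tags), bio_spans(p_tags)
        tp += len(g & p)
        fp += len(p - g)
        fn += len(g - p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

gold = [["B-PER", "I-PER", "O", "B-LOC"]]
pred = [["B-PER", "I-PER", "O", "O"]]
print(entity_f1(gold, pred))  # precision 1.0, recall 0.5, so F1 is about 0.667
```

Because a span counts only if its boundaries and type match exactly, long or nested business entities that annotators and models delimit differently can depress F1 sharply even when most tokens are tagged correctly.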

However, business applications seldom enjoy the high scores reported in academia. One can theorize that the main reason is that text sources and entities in industry and academia differ in several noticeable ways.

In my talk, I will highlight the key differences between typical academic NER/RE corpora and the data often dealt with in industry. Further, I will describe our attempt to bridge the gap between business and researchers by creating the RuREBus corpus and conducting the RuREBus shared task. Finally, I will provide some insights into the practical interpretation of the shared task results.